About me

Hello 👋, I'm Olly Smith, a seasoned full-stack software engineer with two decades of experience in the tech industry. During my career, I've had the privilege of working at some of the world's most impactful companies, including more than a decade at Google.

Projects

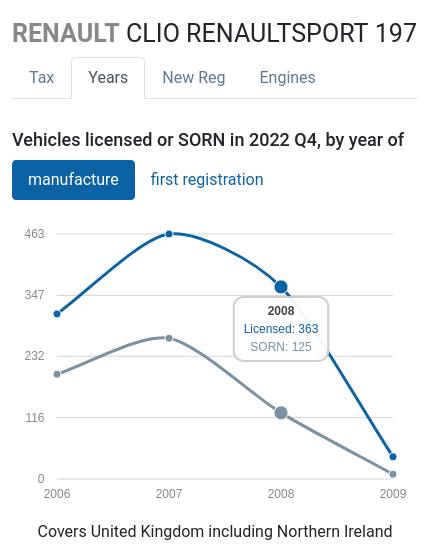

How Many Left?

How Many Left? is a database and search engine of statistics about cars, motorcycles, and commercial vehicles registered in the United Kingdom, based on public data published by the Department for Transport. It has become an invaluable tool for motoring enthusiasts in the UK and has even been featured on the BBC's Top Gear TV programme.

Writing

- Test remote javascript APIs with Capybara and puffing-billy

- Install Ruby Enterprise Edition 1.8.7 in OS X Mountain Lion

- OBDII Wi-Fi Hacking

- Graceful server shutdown with node.js / express

- Debian Wheezy Killed My Pogoplug

- Make TimeMachine in OS X Lion work with Debian Squeeze (stable) netatalk servers

- PyOpenSSL in a virtualenv on OS X

- Running openoffice/libreoffice as a service

- My Bank Holiday Project: Tweet GP

- Using connect-assetmanager with sass and coffee-script

- Embedding fonts in Flex apps

- Step 1: complete

- Looking promising...

- More results

- First results

- Time for a project...

- Netatalk on an Ubuntu virtual machine

- RESTful Services in Visual C# 2008

- Subversion and Visual C++